User Input and Your Network

A well-known saying in application security is to “never trust user input.” However, in practice, although we don't need to trust it, we must often handle the input in some manner. One method is to escape the output when displaying it back to the user. However, when it comes to making HTTP requests against user-provided URLs, the situation becomes much more complex.

Webhooks are a common case of making web requests based on user input: an end user asks an application to send a POST request to a designated URL whenever a specific action occurs. Another example is a chat app that generates a preview for a URL posted by a user, including a website summary and an embedded image.

SSRF

At first glance, it may seem acceptable to make web requests to obtain this information, but blindly returning information from any endpoint can be dangerous. For example, in most cloud infrastructure, short-lived access tokens are easily retrievable from a metadata endpoint at a non-public IP address (typically the link-local address 169.254.169.254) so that SDKs can authenticate with no stored secrets. Returning the results from this endpoint could allow an attacker to act as that service for a short period of time.

Tricking a server into making a request on an attacker’s behalf is a vulnerability known as Server-Side Request Forgery (SSRF). It has become better understood as incidents have increased, and it was added to the OWASP Top 10 in 2021. The impact of an SSRF vulnerability varies greatly with your infrastructure and how it’s configured. Sometimes, attackers use SSRF to enumerate other resources behind a network perimeter. With modern cloud-based architectures, however, the impact can be much more severe. In the Capital One breach in 2019, the attacker accessed the AWS metadata service via SSRF to obtain highly privileged credentials, and the resulting data breach exposed the information of over 100 million users. Capital One ultimately paid $270 million to settle claims from bank regulators and customers.

If you haven’t prepared for the possibility of SSRF, attackers could easily gain access to sensitive information or even credentials for your cloud resources. GCP and Azure mitigate this issue by requiring a custom header on metadata requests, which is harder for an attacker to supply through a forged request. Next, I’ll explain how we handle this inside a Kubernetes cluster.

Hazard Control

How can you prevent your application from unintentionally sending requests to these endpoints? One option is a DNS blocklist, but a bad actor can circumvent it by creating DNS aliases that point to the same private IP addresses. Another option is a blocklist of the IP addresses belonging to your internal endpoints, but that requires resolving DNS yourself and knowing every IP address of every internal service that shouldn’t be queried (and those are likely to change over time). After weighing the tradeoffs and complexities of these options, you might decide to block all access to internal (RFC 1918) IP address space, along with link-local addresses such as the metadata endpoint.
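As a rough illustration of the IP-based check described above, here is a minimal Python sketch. The function names are hypothetical and this is not the code we run. It assumes that "anything not globally routable" is an acceptable definition of internal, and it leans on the standard library's `ipaddress` module to make that call.

```python
import ipaddress
import socket
from typing import List


def is_public_ip(address: str) -> bool:
    """Return True only for addresses that are globally routable."""
    ip = ipaddress.ip_address(address)
    # is_global is False for RFC 1918 ranges, loopback, link-local
    # (169.254.0.0/16, which contains the cloud metadata address),
    # and other reserved ranges.
    return ip.is_global


def resolve_public_addresses(hostname: str, port: int = 443) -> List[str]:
    """Resolve hostname; raise ValueError if any resolved address is non-public."""
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    # Each entry's sockaddr tuple starts with the address string.
    addresses = sorted({info[4][0] for info in infos})
    for address in addresses:
        if not is_public_ip(address):
            raise ValueError(
                f"{hostname} resolves to non-public address {address}"
            )
    return addresses
```

A check like this only helps if the request is then sent to one of the addresses that was checked. If the HTTP client resolves the hostname again, an attacker who controls DNS can return a different answer the second time.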

Blocking private IP space solves the problem at the request origin, which means that only addresses on the public internet can be accessed, not those inside your cluster, VPC, or cloud provider. However, mapping out all current and future components of your application that have the ability to make HTTP requests can be difficult, so there's still the possibility of an unhandled case where an attacker could compromise the request and leak or expose information. To solve this, we prevent all unhandled traffic from reaching the network entirely.

Network Policies

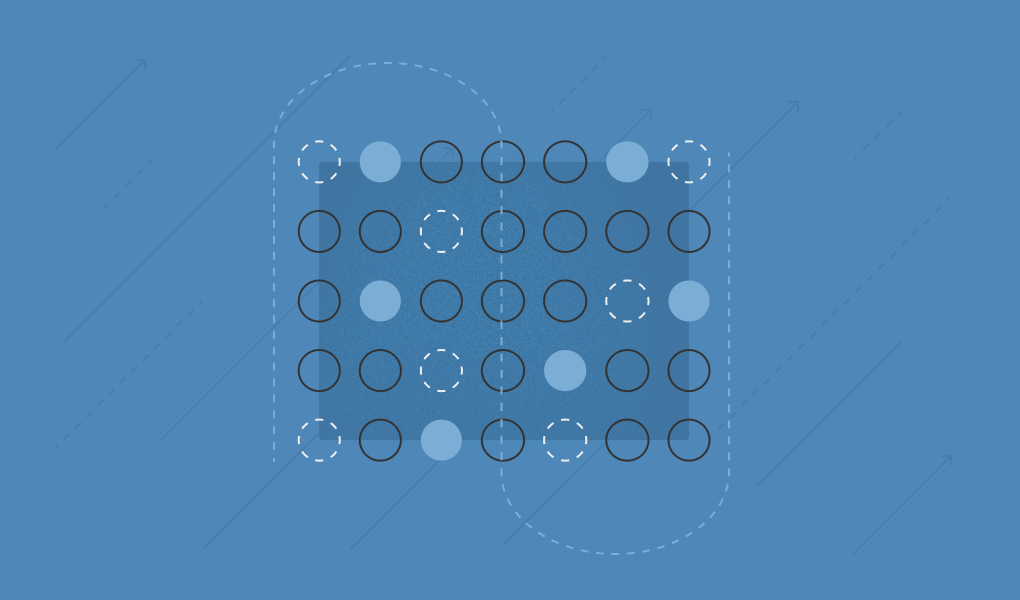

In Kubernetes, we can use network rules to control the behavior of incoming and outgoing traffic instead of relying on individual requests or the correctness of our application. Instead of allowing a pod to make any request it wants to the internet, we can restrict the pod from making any requests and have another service inside the cluster that proxies all internet-bound traffic. This way, your pod can be allowed to talk to that service, and that service can be allowed to talk to the internet but is banned from talking to internal services or even other pods in your cluster. The next step is configuring your cluster to make sure all your intentional requests go through the proxy.

SSRF Prevention at Material

Here are the steps we took at Material to harden our Kubernetes clusters against SSRF:

First, to prevent our Kubernetes pods, nodes, and other hosted resources (such as PostgreSQL) from being directly exposed to the public internet, we run them inside a private VPC with a Cloud NAT.

Second, to further restrict traffic from our GKE pods to other pods, known IP address blocks, or even the internet at large, we leverage NetworkPolicies on GKE’s Dataplane V2, which uses Cilium for cluster networking.

Finally, to let a set of allow-listed traffic out, we use Stripe’s Smokescreen. It’s a lightweight proxy written in Go with the intent of being a fast filter on internet-bound traffic. Then, in our application, we instrumented every place where HTTP requests can be made to get their own authorization, so the logs in Smokescreen can identify traffic sources and be used to filter out any requests they shouldn’t be making.

In order to keep things performant, we run Smokescreen in a DaemonSet so each node has its own instance. The applications running on that node contact the node’s IP on a known port in order to keep traffic local. This has the added benefit of reducing the number of hosts that punch through the NAT, lowering total port usage and the likelihood of needing multiple outbound NAT IPs.
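From the application's side, talking to the node-local proxy can look roughly like the Python sketch below. It is an illustration under stated assumptions, not our production code: the helper names are hypothetical, the node's IP is assumed to be handed to the pod in some way (for example through an environment variable populated from the pod's `status.hostIP` field), and port 4750 is an assumed value for the proxy's listen port.

```python
import urllib.request

# Assumption: the port the node-local proxy listens on.
PROXY_PORT = 4750


def proxy_url(node_ip: str, port: int = PROXY_PORT) -> str:
    """Build the URL of the proxy instance running on this pod's node."""
    return f"http://{node_ip}:{port}"


def build_proxied_opener(node_ip: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends http and https requests via the node-local proxy."""
    proxy = proxy_url(node_ip)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

An opener built this way would be used as `build_proxied_opener(node_ip).open(url)`. Because the network policy blocks direct egress from the pod, any code path that forgets to use the proxy fails closed instead of reaching the internet on its own.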

End Result

There are a few caveats. If you’re using Workload Identity, requests to the metadata endpoint can’t be made through the proxy, or you’d be using the proxy’s credentials on any action performed (if you could get to the internal IP in the first place). In our case, that meant non-application traffic to the metadata endpoint had to be added to an allowlist. Similarly, the Google APIs do not provide a clean way to send API traffic through a proxy while requesting authentication without one, so private.googleapis.com was also added to the allowlist.

This setup has proven to work well for us so far. Unsafe requests can’t be made directly to the internet from our application code, reducing the possibility of leaking information. Through Smokescreen, all of our outbound application traffic gets logged, giving us increased visibility into potential sources of malicious network traffic. This configuration gives us the ability to classify the who, what, and where of all traffic that leaves our network, while still safely being able to request user-provided URLs.

What’s Next

Defense-in-depth is a security mantra for good reason. There are no silver bullets. Any one security control may fail, so you should have layered defenses to reduce your risk. The controls we’ve outlined above prevent outbound application HTTP/S traffic from hitting internal services, but there are other SSRF attack vectors, such as other protocols (file://, gopher://, etc.) and indirect ways of generating requests like XML External Entity (XXE). Additional controls you may consider:

- Wherever users can input URLs, validate them to make sure that they match the expected protocols, such as http:// and https://.

- When parsing structured data like XML, make sure your library is configured not to parse external entities.

- Use AppArmor to prevent unwanted application behavior, such as reading arbitrary files.
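
The first of these controls, protocol validation, can be sketched in a few lines of Python. The function name and the allowed set are illustrative assumptions. A check like this complements the network-level controls above and does not replace them.

```python
from urllib.parse import urlsplit

# Assumption: only plain web URLs are expected from users.
ALLOWED_SCHEMES = {"http", "https"}


def is_allowed_url(url: str) -> bool:
    """Accept only URLs with an allowed scheme and a non-empty host."""
    try:
        parts = urlsplit(url)
    except ValueError:
        # Malformed input, such as an unbalanced IPv6 bracket.
        return False
    # urlsplit lowercases the scheme, so "HTTPS://" is treated like "https://".
    return parts.scheme in ALLOWED_SCHEMES and bool(parts.hostname)
```

This rejects inputs such as `file:///etc/passwd` and `gopher://example.com/` before any request is attempted, while still accepting ordinary web URLs.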