At Material Security, our unique optional deployment model allows us to uphold rigorous standards for security and reliability by dedicating a separate Google Cloud Platform (GCP) project to each of our customers. This total isolation of customer data and infrastructure establishes trust with our customers while also giving us the flexibility to customize features and deployment configurations on a per-tenant basis.

While our single-tenant model is incredibly valuable in most respects, it does present some challenges, especially for observability. Because all of our metrics live in customer-specific GCP projects, managing and viewing metrics across the entire fleet becomes complex.

In this post we'll explore recent enhancements to our observability capabilities and our journey towards establishing a unified control panel for monitoring the health and performance of our service.

Why is this important?

As our customer base continues to grow, the need for a scalable monitoring and alerting strategy becomes increasingly critical. Without a comprehensive view of our system’s performance across all customers, it is difficult to proactively identify and address service disruptions or regressions. With global observability, our Engineering team can more efficiently identify, root-cause and mitigate production issues, ultimately minimizing impact to our customers.

Observability at Material: Past vs. Present

In Material’s early days, we considered running the standard Prometheus, Grafana, and Alertmanager stack. However, given our optional distributed single-tenant deployment model, we would have had to either administer an entire copy of the stack for each customer (meaning hundreds or thousands of copies) or maintain a centralized Prometheus instance incapable of any project-level aggregation. Neither approach seemed scalable or reliable given the limitations of self-managed Prometheus. Varying traffic volumes and isolation were also concerns. For example, if one customer project had 10 pods to scrape and another had 1,000, we could not guarantee that the smaller customer would not be drowned out if scraping fell behind. Given these constraints, we opted for a stack composed mainly of built-in GCP tooling.

Today, our observability suite consists of the following:

- We use GCP’s Cloud Monitoring product to monitor the performance and health of our GCP infrastructure, from our Kubernetes workloads to our Postgres Cloud SQL instance to our Pub/Sub backlogs.

- We emit structured application logs to Cloud Logging (formerly part of Stackdriver). These give us detailed information, such as what a given worker is doing at any point in time or how long a given operation took. We also export these logs to BigQuery through a Cloud Logging sink, which lets us query them with SQL.

- Additionally, we use Log-based Metrics to build various time series metrics from our application logs (a workaround in lieu of installing an actual time series collector). For example, we use this framework to measure how long it takes our system to become aware of an email or file (or a change to one) by emitting a log at various checkpoints in our processing pipeline.

- Lastly, we use Sentry for error reporting and distributed tracing. We send structured application logs to Sentry by creating a synthetic exception that contains exactly the data we want. This is how we ensure we never send PII to Sentry: we never forward organic exception objects (e.g. a low-level library throwing something like "Error: Can't parse string ${s}" and embedding an email address or email body in the exception). We isolate customers from one another by creating a DSN key for each one and assigning each key a share of our total Sentry quota, so that one noisy customer can’t exhaust the shared quota. We can also instrument Sentry transactions in our codebase to deep dive into the performance profile of a particular workload or operation.
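Two ideas in the list above lend themselves to a sketch: emitting a structured checkpoint log from which a log-based metric can extract a latency, and building a synthetic error from allowlisted fields so the original exception's message never leaves the process. The TypeScript below is a minimal illustration, not Material's code; every field name (`tenant`, `checkpoint`, `latencyMs`, and so on) and helper is hypothetical. It relies on the fact that, on GKE, a single-line JSON object written to stdout is ingested by Cloud Logging as a structured entry, with `severity` and `message` treated as special fields.

```typescript
// Hypothetical helpers; field names are illustrative assumptions.
type Severity = "DEBUG" | "INFO" | "WARNING" | "ERROR";

// Fields allowed to leave the process. Anything else is dropped.
const SAFE_FIELDS = ["tenant", "checkpoint", "latencyMs", "workerId", "operation"] as const;
type SafeField = (typeof SAFE_FIELDS)[number];
type SafeContext = Partial<Record<SafeField, string | number>>;

// Keep only allowlisted, primitive-valued keys from an arbitrary context.
function pickSafe(ctx: Record<string, unknown>): SafeContext {
  const out: SafeContext = {};
  for (const key of SAFE_FIELDS) {
    const v = ctx[key];
    if (typeof v === "string" || typeof v === "number") out[key] = v;
  }
  return out;
}

// Emit one JSON line to stdout; Cloud Logging on GKE parses it into jsonPayload.
function logCheckpoint(checkpoint: string, latencyMs: number, ctx: Record<string, unknown> = {}): string {
  const line = JSON.stringify({
    severity: "INFO" as Severity,
    message: `checkpoint ${checkpoint}`,
    ...pickSafe(ctx),
    checkpoint,
    latencyMs,
  });
  console.log(line);
  return line;
}

// An error whose message and context are built only from safe data.
class SyntheticError extends Error {
  readonly kind: string;
  readonly context: SafeContext;
  constructor(kind: string, context: SafeContext) {
    super(`synthetic:${kind}`);
    this.name = "SyntheticError";
    this.kind = kind;
    this.context = context;
  }
}

function toSyntheticError(kind: string, _organic: unknown, ctx: Record<string, unknown>): SyntheticError {
  // `_organic` is deliberately ignored: neither its message nor its stack is copied.
  return new SyntheticError(kind, pickSafe(ctx));
}
```

A log-based distribution metric could then extract `jsonPayload.latencyMs` from entries whose `jsonPayload.checkpoint` matches a given stage. The synthetic error would be passed to Sentry (for example via `Sentry.captureException`) in place of the organic one; the exact capture call and context shape are left out of this sketch.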

Until recently, we had no practical, user-friendly, or cost-effective way to query metrics across multiple customers. Whenever we wanted to see metric data for multiple customers, we’d have to navigate to each customer’s GCP project and query the metric we cared about, or run a very expensive, manually aggregated query. Aside from being cumbersome, this approach wouldn’t let us visualize, let alone alert on, changes in metrics across projects. For example, to find out that customers running release X had suddenly started seeing increased latency in a particular part of our syncing pipeline, or that two or more projects were exhibiting the same symptoms of an issue, we would have had to manually spot-check individual deployments.

These pain points ultimately compelled us to devise a strategy for establishing global observability. Over the last few months, we’ve invested in enhancing our cross-project telemetry using GCP’s Metrics Scopes as well as Managed Prometheus.

Aggregating metrics with Metrics Scopes

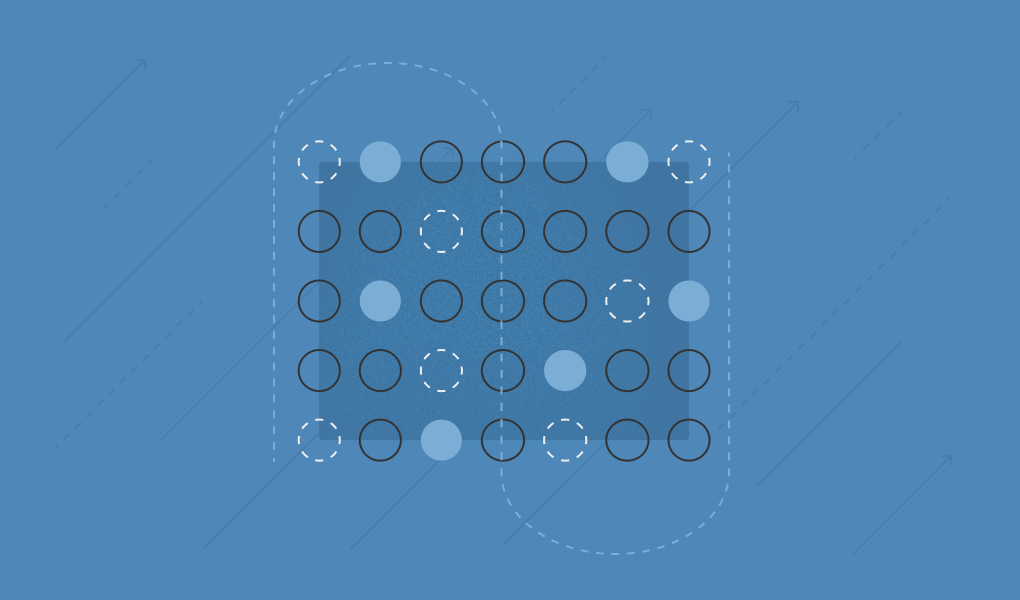

With Metrics Scopes, a single “scoping” project can view and query metrics stored in up to 375 other projects. This allows us to visualize our GCP system metrics as well as our custom log-based metrics (but, importantly, not the logs themselves) across our customer deployments. In keeping with our infrastructure-as-code model, our deployment scripts add each customer GCP project to the metrics scope of a single monitoring project.
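Adding a project to a metrics scope can be scripted against the Cloud Monitoring v1 API, which exposes a `POST .../v1/locations/global/metricsScopes/{scoping}/projects` endpoint. The sketch below only builds that request and does not send it; actually calling it requires an authenticated client. Because a scope holds at most 375 monitored projects, it also shows one hypothetical way to spread a fleet across several scoping projects by filling each one in turn. The `monitoring-scope-N` naming and the index-based assignment are assumptions, not how Material's deployment scripts work.

```typescript
// Documented maximum number of monitored projects per metrics scope.
const MAX_PROJECTS_PER_SCOPE = 375;

interface AddToScopeRequest {
  method: "POST";
  url: string;
  body: { name: string };
}

// Hypothetical: pick a scoping project by filling each scope before moving on.
function scopingProjectFor(customerIndex: number, prefix = "monitoring-scope"): string {
  if (!Number.isInteger(customerIndex) || customerIndex < 0) {
    throw new RangeError("customerIndex must be a non-negative integer");
  }
  const shard = Math.floor(customerIndex / MAX_PROJECTS_PER_SCOPE);
  return `${prefix}-${shard}`;
}

// Build (but do not send) the request that adds `monitoredProject`
// to the metrics scope hosted by `scopingProject`.
function addToScopeRequest(scopingProject: string, monitoredProject: string): AddToScopeRequest {
  const parent = `locations/global/metricsScopes/${scopingProject}`;
  return {
    method: "POST",
    url: `https://monitoring.googleapis.com/v1/${parent}/projects`,
    body: { name: `${parent}/projects/${monitoredProject}` },
  };
}
```

An index-based assignment is only stable while the customer ordering is; recording each project's scope in deployment configuration would avoid reshuffling when customers are added or removed.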

These scoping projects now let us aggregate metrics from all customer deployments within their metrics scope and slice and dice them using the project_id label.

Within these monitoring projects, we’ve built dashboards for visualizing metrics like message syncing performance across all instances, and have also started to build up fleet-wide alerting.
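Fleet-wide alerting boils down to grouping samples by `project_id` and asking how many projects look unhealthy at once, which matches the "two or more projects showing the same symptom" case described earlier. The sketch below models that in plain TypeScript under assumed inputs (a list of latency samples labeled with `project_id`); in PromQL the same rule could be written roughly as `count(avg by (project_id) (some_latency_metric) > 3.5) >= 2`, where the metric name and threshold are placeholders.

```typescript
// One sample of a latency metric, labeled the way a scoping project exposes it.
interface Sample {
  labels: { project_id: string; release?: string };
  value: number; // e.g. a sync latency in seconds (illustrative)
}

// Average samples per project, like `avg by (project_id) (...)` in PromQL.
function avgByProject(samples: Sample[]): Map<string, number> {
  const sums = new Map<string, { total: number; n: number }>();
  for (const s of samples) {
    const acc = sums.get(s.labels.project_id) ?? { total: 0, n: 0 };
    acc.total += s.value;
    acc.n += 1;
    sums.set(s.labels.project_id, acc);
  }
  const out = new Map<string, number>();
  for (const [project, { total, n }] of sums) out.set(project, total / n);
  return out;
}

// Hypothetical rule: fire only when at least `minProjects` projects breach together.
function fleetAlert(samples: Sample[], threshold: number, minProjects = 2): { firing: boolean; projects: string[] } {
  const breaching = [...avgByProject(samples)]
    .filter(([, avg]) => avg > threshold)
    .map(([project]) => project)
    .sort();
  return { firing: breaching.length >= minProjects, projects: breaching };
}
```

Requiring several projects to breach together trades sensitivity for fewer pages caused by a single noisy tenant; per-project alerts can still cover the single-customer case.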

With this new cross-project observability, we saw potential for enhancing the way we build, manage, and ingest time series metrics. Introducing Prometheus into our observability stack was the next logical step, offering better flexibility and a smoother developer experience while optimizing our GCP spend.

Leveling up our metrics with Managed Prometheus

Our log-based metrics are effective, but deriving time series samples from log entries is not the most efficient approach. Most importantly, high-volume logging can significantly increase our GCP spend, since Cloud Logging bills by the volume of logs ingested.

The Managed Prometheus offering for GKE lets us scrape Prometheus metrics from our Kubernetes workloads while keeping all the benefits of our existing metrics: we can query them in Metrics Explorer, build dashboards, and write alerts (using PromQL!).

Our TypeScript codebase now uses prom-client, the Prometheus client library for Node.js, to export a set of default Node.js runtime metrics, including event loop lag, garbage collection time, and process CPU time. We can also define custom time series metrics, including histograms, gauges, counters, and summaries. Creating a new time series metric is now a single definition in code that specifies the metric type, the labels that annotate it, and any type-specific configuration, such as a histogram’s bucket boundaries.
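To make the bucket configuration concrete, the dependency-free sketch below models what a Prometheus histogram exposes: per-bucket counts rendered cumulatively with an `le` label, a `+Inf` bucket equal to the total count, and `_sum`/`_count` series. It is an illustration of the text exposition format, not prom-client's implementation; with prom-client itself the equivalent is a `new Histogram({ name, help, labelNames, buckets })` definition followed by `observe(...)` calls, and `collectDefaultMetrics()` turns on the runtime metrics. The metric name used below is hypothetical.

```typescript
// Minimal model of a Prometheus histogram with configurable buckets.
class SketchHistogram {
  private readonly name: string;
  private readonly buckets: number[];
  private readonly counts: number[];
  private sum = 0;
  private count = 0;

  constructor(name: string, buckets: number[]) {
    this.name = name;
    this.buckets = [...buckets].sort((a, b) => a - b);
    this.counts = this.buckets.map(() => 0);
  }

  observe(value: number): void {
    this.sum += value;
    this.count += 1;
    // Record the value in the first bucket whose upper bound contains it.
    const i = this.buckets.findIndex((le) => value <= le);
    if (i >= 0) this.counts[i] += 1;
  }

  // Text exposition format (HELP line omitted for brevity). Bucket counts
  // are cumulative, and the +Inf bucket always equals the total count.
  render(): string {
    const lines = [`# TYPE ${this.name} histogram`];
    let cumulative = 0;
    this.buckets.forEach((le, i) => {
      cumulative += this.counts[i];
      lines.push(`${this.name}_bucket{le="${le}"} ${cumulative}`);
    });
    lines.push(`${this.name}_bucket{le="+Inf"} ${this.count}`);
    lines.push(`${this.name}_sum ${this.sum}`);
    lines.push(`${this.name}_count ${this.count}`);
    return lines.join("\n");
  }
}
```

Bucket boundaries determine how precisely quantiles such as p95 can later be estimated with `histogram_quantile`, so they are worth choosing around the latencies a workload actually sees.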

Using Managed Prometheus we also export metrics from pgbouncer, our PostgreSQL connection pooler.
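With Managed Prometheus, scrape targets are declared through a `PodMonitoring` custom resource (`monitoring.googleapis.com/v1`) that selects pods by label and lists the endpoints to scrape. The sketch below builds such a manifest as a TypeScript object; since `kubectl apply -f -` accepts JSON, the printed output could be applied directly. The setup it assumes is hypothetical: pgbouncer pods labeled `app: pgbouncer`, running a Prometheus exporter sidecar (pgbouncer does not serve Prometheus metrics on its own) on a container port named `metrics`. The same builder would cover Node.js workloads exposing prom-client's `/metrics` endpoint.

```typescript
// Shape of a Managed Service for Prometheus PodMonitoring resource (subset).
interface PodMonitoring {
  apiVersion: "monitoring.googleapis.com/v1";
  kind: "PodMonitoring";
  metadata: { name: string; namespace: string };
  spec: {
    selector: { matchLabels: Record<string, string> };
    endpoints: { port: string | number; path: string; interval: string }[];
  };
}

// Hypothetical builder: scrape `path` on `port` of pods labeled app=<appLabel>.
function podMonitoring(
  name: string,
  namespace: string,
  appLabel: string,
  port: string | number,
  interval = "30s",
): PodMonitoring {
  return {
    apiVersion: "monitoring.googleapis.com/v1",
    kind: "PodMonitoring",
    metadata: { name, namespace },
    spec: {
      selector: { matchLabels: { app: appLabel } },
      endpoints: [{ port, path: "/metrics", interval }],
    },
  };
}

// Assumed labels, namespace, and port name; adjust to the real deployment.
const manifest = podMonitoring("pgbouncer", "default", "pgbouncer", "metrics");
console.log(JSON.stringify(manifest, null, 2));
```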

Querying with PromQL

In addition to supporting out-of-the-box Prometheus metric management, GCP’s Cloud Monitoring supports querying metrics and defining dashboards and alerts with Prometheus’ powerful (and even Turing-complete!) query language, PromQL. With PromQL we’ve been able to build more intelligence into our alerting strategy, in many cases by dividing one time series by another.
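Dividing one time series by another in PromQL depends on vector matching: by default `a / b` pairs series whose label sets are identical apart from the metric name, drops series with no partner, and removes the metric name from the result; `a / on(project_id) b` matches on the listed labels only. The sketch below is a simplified model of that one-to-one matching, written for illustration; it omits `ignoring`, `group_left`/`group_right`, and Prometheus's exact error messages. Series and label names are hypothetical.

```typescript
type Labels = Record<string, string>;
interface Series { labels: Labels; value: number }

// Matching key: the `on` labels if given, else all labels except __name__.
function matchKey(labels: Labels, on?: string[]): string {
  const keys = (on ?? Object.keys(labels).filter((k) => k !== "__name__")).slice().sort();
  return JSON.stringify(keys.map((k) => [k, labels[k] ?? ""]));
}

// Simplified one-to-one matching for `a / b` or `a / on(...) b`.
function divide(a: Series[], b: Series[], on?: string[]): Series[] {
  const right = new Map<string, Series>();
  for (const s of b) {
    const key = matchKey(s.labels, on);
    if (right.has(key)) throw new Error("duplicate series on right-hand side");
    right.set(key, s);
  }
  const seen = new Set<string>();
  const out: Series[] = [];
  for (const s of a) {
    const key = matchKey(s.labels, on);
    const match = right.get(key);
    if (!match) continue; // unmatched series are dropped
    if (seen.has(key)) throw new Error("duplicate series on left-hand side");
    seen.add(key);
    // Drop the metric name; with on(...), keep only the listed labels.
    const labels: Labels = {};
    for (const [k, v] of Object.entries(s.labels)) {
      if (k === "__name__") continue;
      if (on && !on.includes(k)) continue;
      labels[k] = v;
    }
    // Float division, so x/0 yields Infinity or NaN, as in PromQL.
    out.push({ labels, value: s.value / match.value });
  }
  return out;
}
```

The silent dropping of unmatched series is the detail most likely to surprise in alerting: a project that stops reporting the denominator simply disappears from the ratio rather than triggering it.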

For instance, we’ve used PromQL to alert on a growing backlog in one of our Pub/Sub topics by taking into account both the backlog size and how quickly subscribers are processing messages relative to how quickly producers are publishing them. Dividing the backlog by that net processing rate tells us approximately how long it will take to catch up completely if processing continues at the current rate. Whereas we previously alerted when the oldest unacked message age approached Pub/Sub’s default retention limit (7 days), we can now identify and act on a growing backlog preemptively, instead of waiting until it has likely already caused problems.
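The backlog arithmetic can be sketched as follows, reading "relative rate" as the subscriber processing rate minus the publish rate. If subscribers are not outpacing publishers, the backlog never drains at current rates, which the sketch represents as `Infinity`. Input names and the alert budget are hypothetical; in PromQL the same idea would divide a backlog gauge by the difference of two `rate()` expressions over the same window.

```typescript
// Hypothetical inputs, with both rates measured over the same window.
interface BacklogSnapshot {
  backlog: number;     // undelivered messages on the subscription
  publishRate: number; // messages/s published to the topic
  ackRate: number;     // messages/s acknowledged by subscribers
}

// Estimated seconds until the backlog drains at current rates.
// Infinity means the backlog is flat or growing.
function secondsToDrain({ backlog, publishRate, ackRate }: BacklogSnapshot): number {
  if (backlog <= 0) return 0;
  const netDrainRate = ackRate - publishRate;
  return netDrainRate > 0 ? backlog / netDrainRate : Infinity;
}

// Hypothetical rule: alert when projected catch-up time exceeds a budget
// chosen well below the 7-day default retention.
function shouldAlert(s: BacklogSnapshot, budgetSeconds: number): boolean {
  return secondsToDrain(s) > budgetSeconds;
}
```

For example, a backlog of 36,000 messages with subscribers acking 15 msg/s against 5 msg/s published drains in 36,000 / 10 = 3,600 seconds, so a six-hour budget would not fire; any snapshot where publishing keeps pace with acking fires immediately.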